Live Face Detection and Oral Temperature Extraction with Thermal Imaging

Differentiate live faces from face pictures, and read your oral temperature during a conversation

Abstract

Front-facing cameras are pervasive on mobile devices, which makes face authentication a convenient way to control access. One drawback, however, is that it can be defeated by placing a picture of a face in front of the camera. Differentiating a live face from a face picture is therefore a challenge for face authentication applications. Our findings indicate that the facial temperature distribution is unique to each person and can be captured with a thermal camera. Here, we propose an algorithm that extracts facial thermal signatures for identity recognition, and we apply this idea to live face recognition.

Patents

Abstract

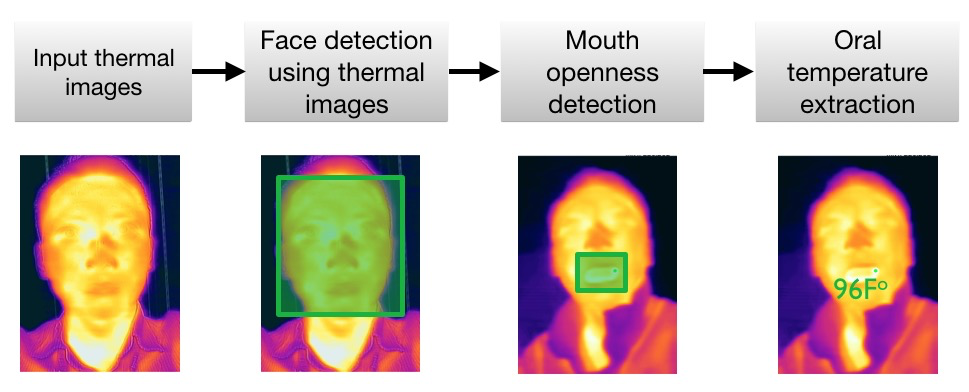

A method, system, and computer product for detecting an oral temperature for a human include capturing a thermal image for a human using a camera having a thermal image sensor, detecting a face region of the human from the thermal image, detecting a mouth region on the face region, detecting an open mouth region on the mouth region, detecting a degree of mouth openness on the detected open mouth region, determining that the degree of mouth openness meets a predetermined criterion, and detecting the oral temperature, responsive to determining that the degree of mouth openness meets the predetermined criterion.
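The gating step in this abstract (read the temperature only when the mouth is open far enough) can be illustrated with a minimal sketch. It is not the patented implementation. It assumes the thermal image is a 2D list of temperatures in degrees Celsius, that a face and mouth detector has already produced `mouth_box`, and that "open mouth" pixels are simply those at or above a hypothetical `warm_threshold`. The function names, the threshold, and the `min_openness` criterion are all illustrative assumptions.

```python
def mouth_openness(thermal, mouth_box, warm_threshold):
    """Return (openness, warm_pixels) for a mouth region.

    thermal: 2D list of temperatures in degrees Celsius (assumed format).
    mouth_box: (top, left, bottom, right), bottom/right exclusive; assumed
               to come from an upstream face and mouth detector.
    warm_threshold: hypothetical cutoff separating the warm mouth interior
                    from lips and surrounding skin.
    """
    top, left, bottom, right = mouth_box
    region = [thermal[r][c] for r in range(top, bottom) for c in range(left, right)]
    if not region:
        return 0.0, []
    warm = [t for t in region if t >= warm_threshold]
    # Openness is approximated as the fraction of warm pixels in the box.
    return len(warm) / len(region), warm


def oral_temperature(thermal, mouth_box, warm_threshold=34.0, min_openness=0.3):
    """Return an oral temperature estimate, or None if the mouth is not open enough."""
    openness, warm = mouth_openness(thermal, mouth_box, warm_threshold)
    if openness < min_openness:
        return None  # criterion not met; skip this frame
    # Illustrative choice: take the hottest pixel of the open-mouth area.
    return max(warm)
```

In a video stream, frames that return `None` would simply be skipped until the speaker's mouth opens wide enough, which is what makes reading the temperature during a conversation plausible.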

Abstract

A computer implemented method includes capturing, via a camera, one or more digital images of a face of a person representative of blood circulation of the person, collecting context information via one or more processors corresponding to the person contemporaneously with the capturing of the one or more digital images, labeling, via a trained individual health model executing on the one or more processors, the one or more digital images based on the blood circulation represented in the image and the collected context information via the trained individual health model that has been trained on prior such digital images and context information; and analyzing, via the one or more processors, the one or more labeled digital images to generate a health index of the person.

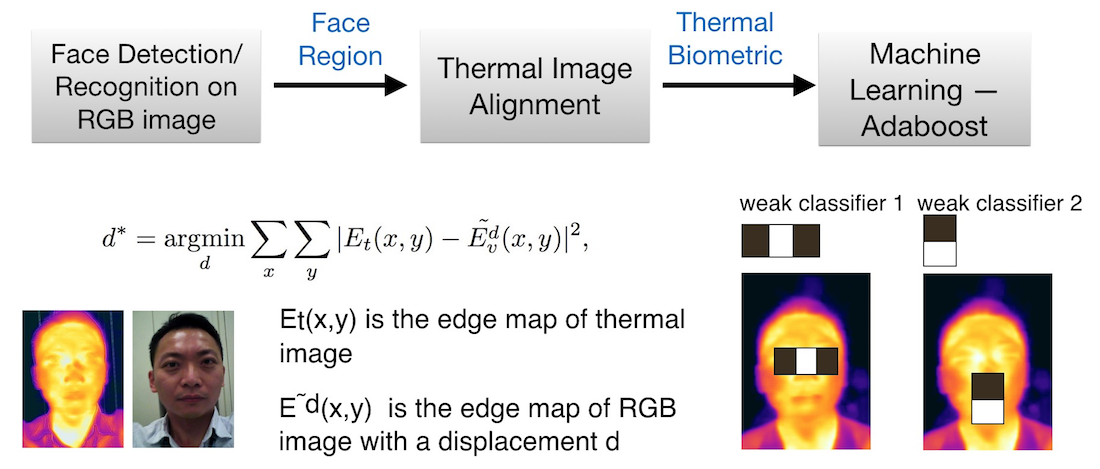

Method and apparatus to identify a live face image using a thermal radiation sensor and a visual radiation sensor

Abstract

A method, system and computer program product are disclosed that comprise capturing first image data of a person's face using at least one sensor responsive in a band of infrared wavelengths and capturing second image data of the person's face using the at least one sensor responsive in a band of visible wavelengths; extracting image features in the image data and detecting face regions; applying a similarity analysis to image feature edge maps extracted from the first and the second image data; and recognizing a presence of a live face image after regions found in the first image data pass a facial features classifier. Upon recognizing the presence of the live face image, additional operations can include verifying the identity of the person as an authorized person and granting the person access to a resource.
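The edge-map similarity step can be sketched as follows. The abstract does not specify the edge detector or the similarity measure, so this example substitutes a simple finite-difference gradient and a Jaccard overlap as stand-ins. It also assumes the infrared and visible images are already aligned and have the same dimensions. The names `edge_map` and `edge_similarity` and the thresholds are hypothetical.

```python
def edge_map(image, threshold):
    """Return the set of (row, col) positions whose gradient exceeds threshold.

    image: 2D list of numbers (pixel intensities or temperatures).
    The gradient is approximated by forward differences, a stand-in for
    whatever edge detector a real system would use.
    """
    edges = set()
    for r in range(len(image) - 1):
        for c in range(len(image[r]) - 1):
            gx = image[r][c + 1] - image[r][c]
            gy = image[r + 1][c] - image[r][c]
            if abs(gx) + abs(gy) > threshold:
                edges.add((r, c))
    return edges


def edge_similarity(infrared, visible, ir_threshold, vis_threshold):
    """Jaccard overlap between the edge maps of two aligned images (0.0 to 1.0)."""
    ir_edges = edge_map(infrared, ir_threshold)
    vis_edges = edge_map(visible, vis_threshold)
    union = ir_edges | vis_edges
    if not union:
        return 0.0
    return len(ir_edges & vis_edges) / len(union)
```

The intuition is that a printed photo shows facial edges in the visible band but an almost flat thermal image, so the overlap is near zero, while a live face produces matching contours in both bands.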

Demo Videos

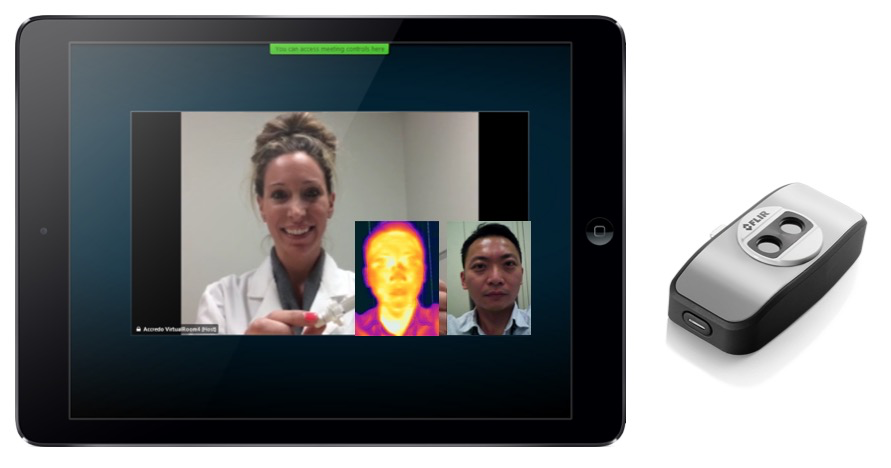

Live face detection: distinguishing a real face from a picture

If you cannot see the video below, click the link https://www.youtube.com/embed/MhDEsSfcPgE

Oral Temperature Extraction

Oral temperature is a significant body signal for healthcare diagnosis. The difficulty in extracting it lies in removing the bias introduced by the temperature of the user's background. Here we propose an algorithm that extracts oral temperature precisely by compensating for the background temperature. Below is the proposed processing flow. With the proposed algorithm, a patient's oral temperature can be extracted during a conversation, which makes remote healthcare more practical.
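The details of the compensation are not given on this page, so the following is only a rough illustration of the general idea, not the proposed algorithm. It assumes the background temperature can be estimated as the median of the pixels outside the face box, and that the bias can be modeled as a linear offset relative to a reference background temperature. The functions `background_temperature` and `compensate`, the reference value `ref_background`, and the calibration coefficient `coeff` are all hypothetical.

```python
from statistics import median


def background_temperature(thermal, face_box):
    """Estimate background temperature as the median of pixels outside the face.

    thermal: 2D list of temperatures in degrees Celsius (assumed format).
    face_box: (top, left, bottom, right), bottom/right exclusive.
    """
    top, left, bottom, right = face_box
    outside = [
        value
        for r, row in enumerate(thermal)
        for c, value in enumerate(row)
        if not (top <= r < bottom and left <= c < right)
    ]
    return median(outside)


def compensate(raw_oral, background, ref_background=25.0, coeff=0.1):
    """Apply an assumed linear correction for background temperature.

    coeff is a hypothetical calibration constant; a real system would
    have to fit it against contact-thermometer measurements.
    """
    return raw_oral + coeff * (ref_background - background)
```

For example, with a raw reading of 36.0 °C taken against a 20 °C background, these illustrative defaults shift the estimate upward by 0.5 °C. The actual size and direction of the correction would depend on calibration.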

Remote Detection for Oral Temperature

A doctor can read your oral temperature during a conversation.